6.0 Report results: Introduction

— M. Granger Morgan and Max Henrion in Uncertainty: A Guide to Dealing with Uncertainty in Quantitative Risk and Policy Analysis

Audiences for your results

Although this is nearly the last step in the process of designing and implementing salmon monitoring programs and analyzing the resulting data, careful attention must be paid to reporting results in a timely and understandable manner to various audiences. Those audiences are best identified by looking back at the goals and objectives for the salmon monitoring program that you identified when working through Step 1 of this web site. For example, you may have stated that you intend to determine the status and time trends in abundance, productivity, and/or diversity of particular salmon populations. You may have stated that you aim to understand the relative importance of human vs. natural mechanisms causing observed changes in those characteristics of salmon populations. In all cases, your monitoring program's results will likely be useful for one or more of the following groups:

- Managers/policy makers in organizations such as fisheries management agencies, the North Pacific Anadromous Fish Commission (NPAFC), and research- or administratively-oriented non-governmental salmon conservation organizations (NGOs) like the State of the Salmon program in Portland, Oregon, U.S.A.

- People associated with local groups such as Watershed Councils, Stream-keepers, and other action-oriented salmon groups that conduct monitoring programs and decide which actions to take based on the results of their monitoring

- Scientists who design monitoring programs and analyze the resulting data

- Technical staff who implement monitoring designs in the field.

Importance of communicating clearly

Scientists who analyze data collected from monitoring programs want their results to be useful for decision making and for planning future activities. However, as we scientists who developed this web site are acutely aware, scientists tend to write as if their readers are other scientists, even when their audiences include the other groups listed above. We should aim for the opposite of what is shown in the cartoon below. That is, we should speak clearly and simply about complex topics.

By Doruk Golcu, from <http://selections.rockefeller.edu/cms/science-and-society/bridging-the-gap-improving-science-communication.html>

Communication can be done clearly by following guidelines for writing and presenting data to non-scientific audiences. Some ideas are presented below and on the next web page, "Options, with examples".

Below are two concrete examples that demonstrate the value of communicating effectively. In the section after these examples, we provide generic suggestions for improving communication.

- The first example purposely does not deal with salmon, but rather with the U.S. Space Shuttle program. We chose this non-salmonid example to make a generically applicable point about the need to think hard about how to present results of technical analyses.

- The second example deals with monitoring of aquatic resources, including fish, but it relates to the slightly different aspect of what scientists need to communicate, rather than how they do so.

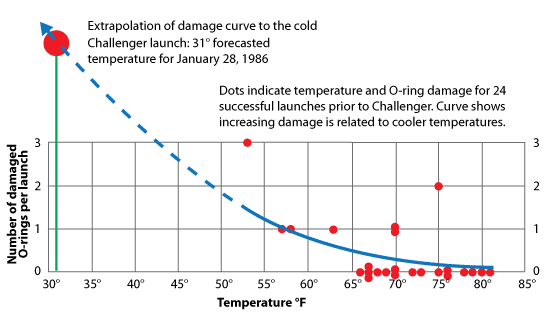

Example 1. One of the world's experts on visual communication of data, Edward Tufte (Tufte 1983, 1990, 1997, 2006), pointed out a key flaw in the information flow between technical staff and decision makers regarding whether to launch the Challenger Space Shuttle in below-freezing weather in January 1986 (Tufte 2003). Proceeding with the planned launch was considered risky because a Shuttle had never been launched in such cold temperatures. During discussions the night before the launch, several pages of information were sent to NASA officials in Florida from the technical staff at Morton Thiokol in Utah (the company that made the solid-fuel booster rocket). Those pages consisted of lists of short bullet points with numerous incomplete sentences, poorly labeled headings, and unclear presentations of data. Transcripts of the associated phone calls showed intense discussions but inadequate focus on THE key issue: that such temperatures had never been encountered in a previous Space Shuttle launch.

What should have been done instead of sending PowerPoint-style lists of points? One example is given by Edward Tufte, who showed that, compared to the pages of bullet points and data that Morton Thiokol had sent to NASA, a simple graphical presentation (Figure 1) could have indicated much more clearly to NASA launch managers in Florida that a launch under the existing conditions would be entering very risky and unknown territory.

Figure 1. Edward Tufte's figure on the 1986 Challenger Space Shuttle launch decision. From http://revcompany.com/blog/2009/08/28/information-overload-comprehension-underload/

The launch proceeded and the Space Shuttle exploded. It was later determined that a seal on a booster rocket had failed because the cold had made it inflexible.

Example 2. Most of this Step 6 discusses how to present results of monitoring programs. However, there is another important issue that must not be forgotten. Scientists involved in designing monitoring programs need to show decision makers, and those who fund monitoring programs, the value of implementing statistically rigorous, well-planned programs. The most useful monitoring programs have been those that were well designed and that used probability-based features to measure biota directly. In contrast, programs are less useful if they lack statistically based designs or if they rely on water-quality surrogates to represent biological status. Salmon monitoring programs need to be held accountable for their usefulness. In a parallel situation, Paulsen et al. (1998) documented that hundreds of billions of dollars have been spent on reducing water pollution, yet surprisingly few monitoring programs have provided data of sufficient quality to determine whether those dollars have resulted in benefits to aquatic biota.
